A newly released report from the House Judiciary Committee reveals a coordinated effort by European Union regulators to pressure major technology companies into enforcing censorship standards that extend far beyond Europe’s borders.

The findings, drawn from thousands of internal documents and communications, detail a long-running strategy to influence global content moderation policies through regulatory coercion and the threat of punishment under Europe’s Digital Services Act (DSA).

The Committee’s latest publication, “The EU Censorship Files, Part II,” coincides with a scheduled February 4 hearing titled “Europe’s Threat to American Speech and Innovation: Part II.”


According to the materials, European officials have been meeting privately with social media companies since at least 2015 to “adapt their terms and conditions” to align with EU political priorities, including restricting certain kinds of lawful political expression in the United States.

Internal records from TikTok, Twitter (now X), and other firms show that the Commission's so-called "voluntary" DSA election guidelines were in fact treated as mandatory conditions for doing business in Europe.

Companies were told to intensify content moderation ahead of elections and report compliance measures to EU officials.

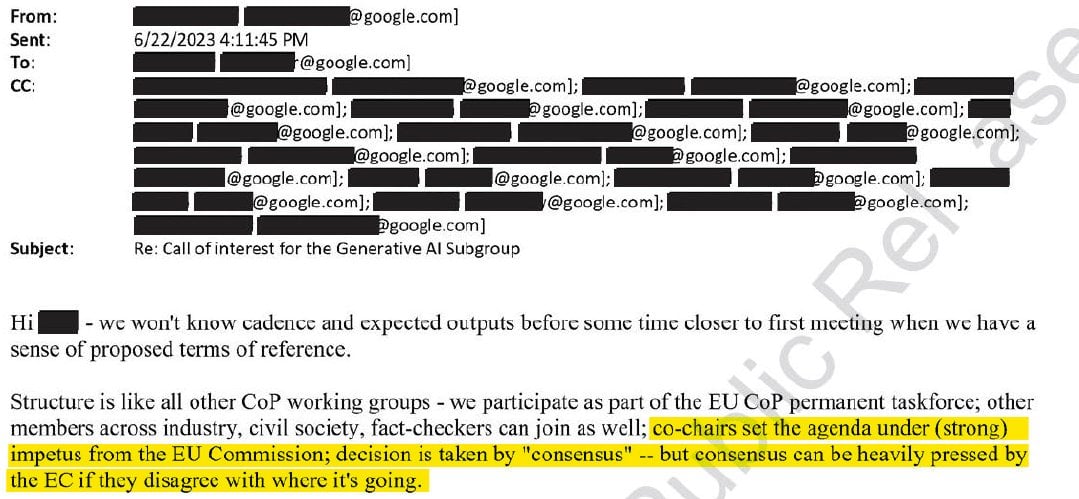

Internal Commission guidance acknowledged that platforms were not formally bound to follow the election guidelines word for word.

At the same time, the documents make clear that deviation was only acceptable if companies could demonstrate that any alternative approach achieved outcomes at least as effective as the Commission’s preferred mitigation measures.

Because the Commission retained authority to judge whether those alternatives were sufficient, the discretion functioned more as a compliance test than a genuine choice.

Platforms faced a narrow path: adopt the guidance as written or persuade the regulator that their substitute controls met an undefined but regulator-determined standard.

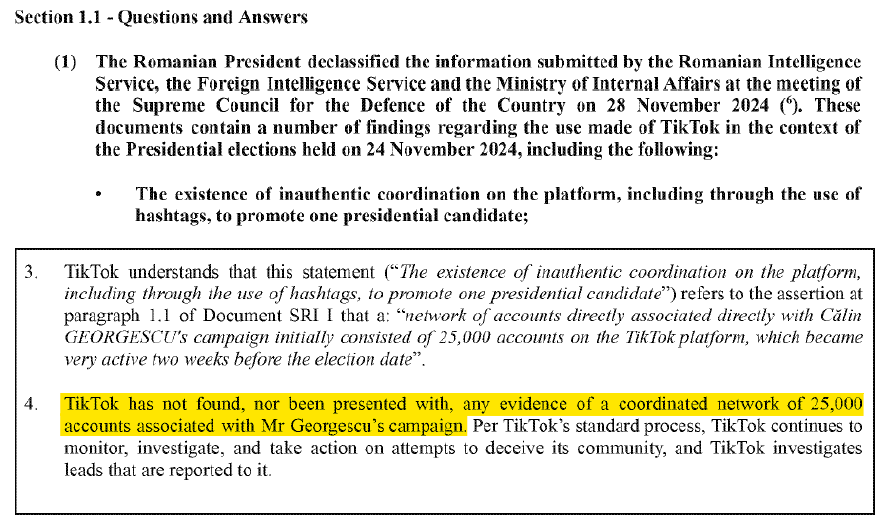

In Romania, declassified national intelligence documents cited in the report call into question the claims of Russian interference that prompted the country's Constitutional Court to annul the first round of the 2024 presidential election.

TikTok told the European Commission that it had found "no evidence" of any coordinated network tied to the candidate at the center of the controversy, Călin Georgescu, contradicting assertions made by Romanian authorities.

Despite this, the report shows EU regulators continued to use “inauthentic coordination” claims as justification for tightening their election-related moderation directives.

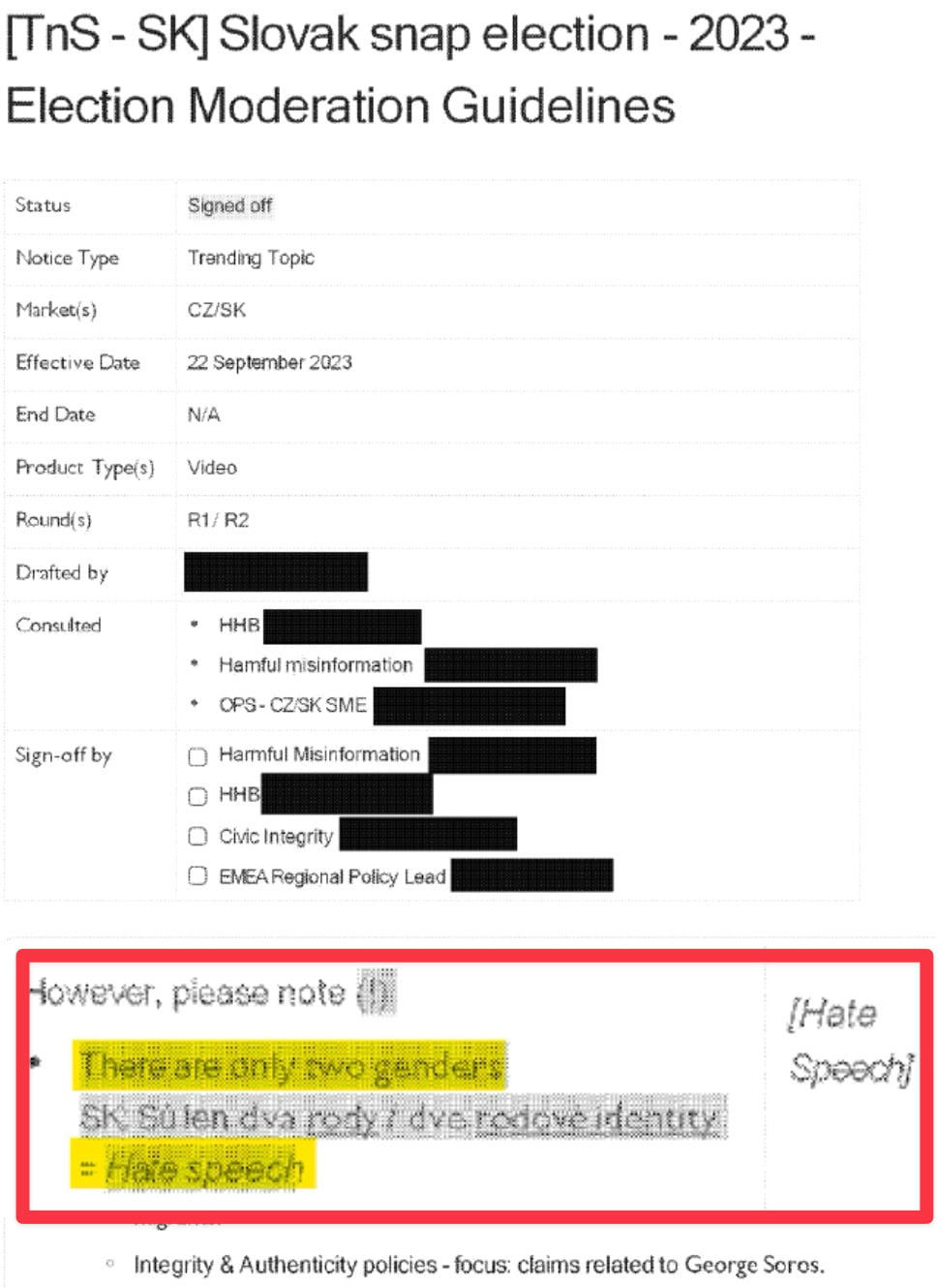

The documents also indicate that after meetings with EU officials, TikTok began suppressing lawful conservative statements on topics such as gender identity.

One moderation log highlighted a directive to label posts claiming that “there are only two genders” as “harmful misinformation.”

Beyond elections within Europe, European Commission Vice President Věra Jourová traveled to California ahead of the 2024 US election to discuss the enforcement of these rules with US-based technology companies.

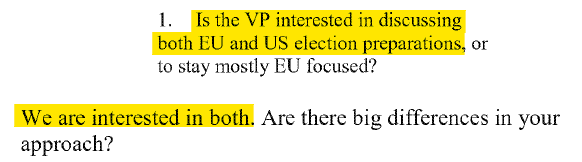

Notes from these meetings show that European officials were asked whether their engagement was limited to elections within the European Union.

The response recorded in the documents was brief: "We are interested in both." The remark indicates that discussions with platforms were not confined to European electoral processes but extended to content moderation policies with global reach.

According to the Committee, the censorship campaign operated continuously through dozens of "disinformation" meetings held between 2022 and 2024.

The pressure reached its peak once the DSA took effect, with the Commission instructing firms to conduct “continuous review of community guidelines.”

Internal records depict a process in which the Commission determines the scope of discussion, channels platforms toward a shared mitigation framework, and evaluates compliance, leaving companies with limited practical discretion.

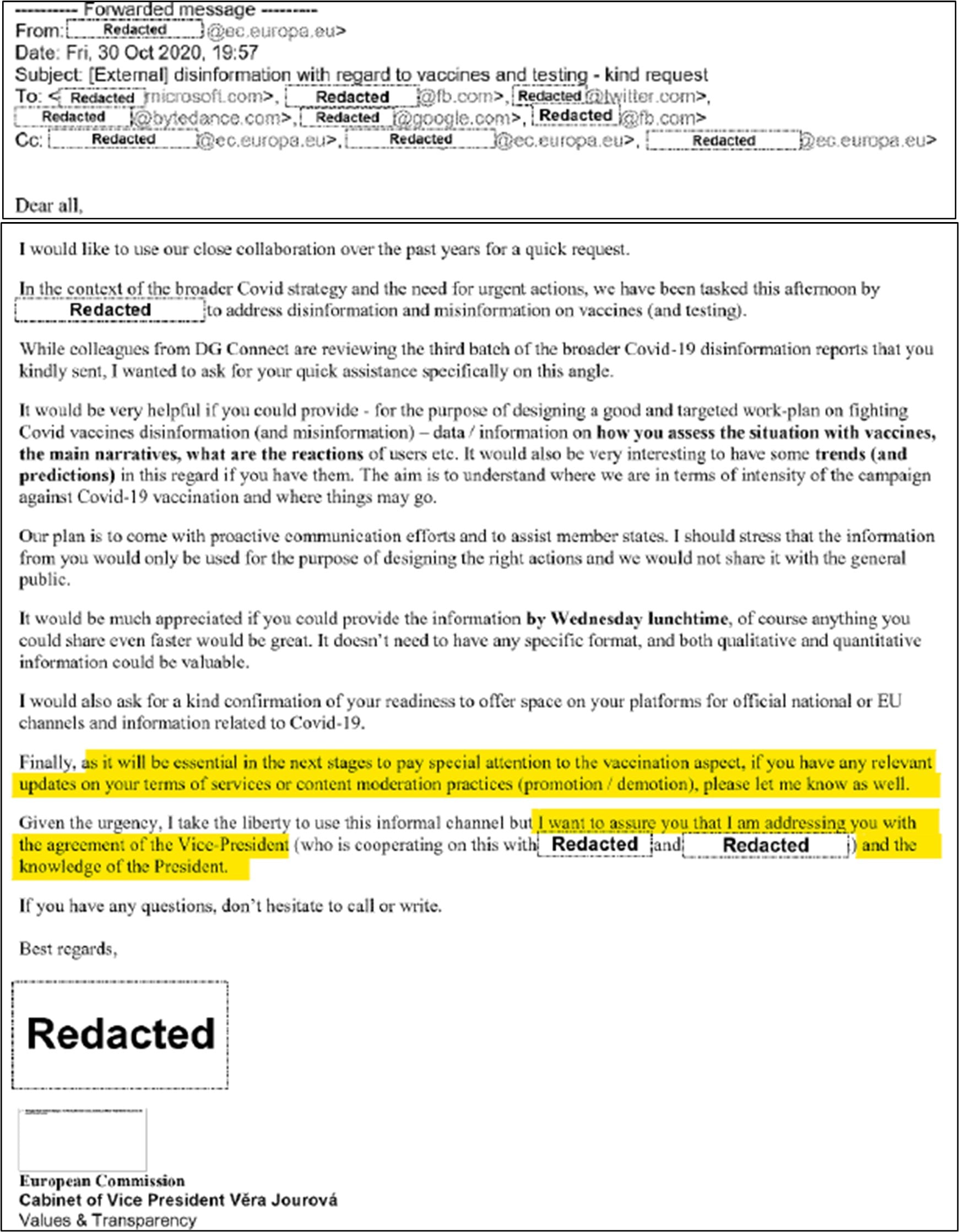

This influence has not been limited to electoral content. Earlier emails reveal EU officials urging companies to police discussion of COVID-19 vaccines, particularly discussion of vaccination campaigns for children in the United States.

In 2020, senior EU figures, including European Commission President Ursula von der Leyen and Jourová, asked companies to remove or demote posts questioning official pandemic narratives.

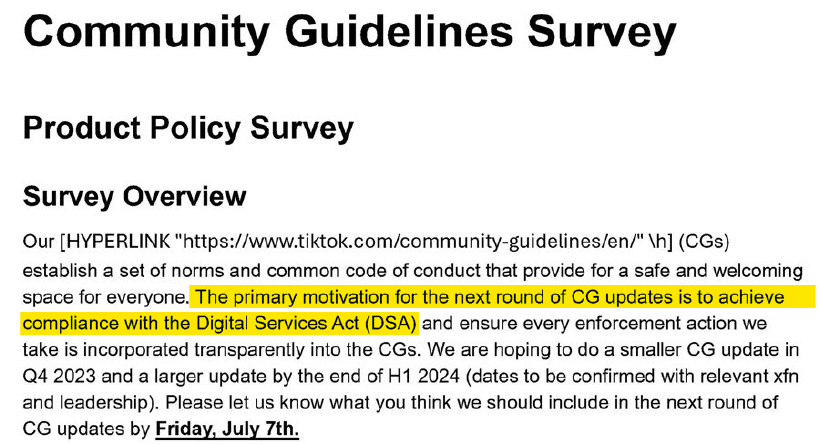

By 2024, TikTok had rewritten its Community Guidelines to comply with the Digital Services Act, introducing new global rules banning “marginalizing speech” and “civic harm misinformation.”

These categories reach far beyond European law, effectively applying EU speech restrictions worldwide. The Committee contends that, as a result of this change, Europe's censorship law now causes TikTok to censor true information in the United States.

The House Judiciary Committee argues that the European Union has exported its censorship framework internationally by exploiting tech companies’ global moderation systems.

Internal platform documentation confirms that once adopted, Community Standards are enforced globally rather than on a country-by-country basis, meaning policy changes made to satisfy European regulators affect users everywhere.

The hearing on February 4 will examine how these foreign regulatory pressures have shaped US online speech, and whether legislative safeguards are needed to protect Americans’ First Amendment rights from overseas influence.